Big Data

John Samuel

CPE Lyon

Année: 2021-2022

Courriel: john(dot)samuel(at)cpe(dot)fr

$ tail /var/log/apache2/access.log

127.0.0.1 - - [14/Nov/2018:14:46:49 +0100] "GET / HTTP/1.1" 200 3477 "-"

"Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:63.0) Gecko/20100101 Firefox/63.0"

127.0.0.1 - - [14/Nov/2018:14:46:49 +0100] "GET /icons/ubuntu-logo.png HTTP/1.1" 304 180 "http://localhost/"

"Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:63.0) Gecko/20100101 Firefox/63.0"

127.0.0.1 - - [14/Nov/2018:14:46:49 +0100] "GET /favicon.ico HTTP/1.1" 404 294 "-"

"Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:63.0) Gecko/20100101 Firefox/63.0"

$ tail /var/log/apache2/error.log

[Wed Nov 14 09:53:39.563044 2018] [mpm_prefork:notice] [pid 849]

AH00163: Apache/2.4.29 (Ubuntu) configured -- resuming normal operations

[Wed Nov 14 09:53:39.563066 2018] [core:notice] [pid 849]

AH00094: Command line: '/usr/sbin/apache2'

[Wed Nov 14 11:35:35.060638 2018] [mpm_prefork:notice] [pid 849]

AH00169: caught SIGTERM, shutting down

$ cat /etc/apache2/apache2.conf

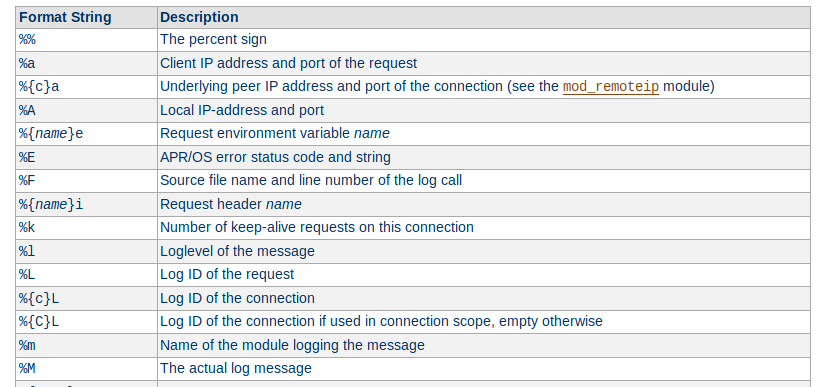

LogFormat "%v:%p %h %l %u %t \"%r\" %>s %O \"%{Referer}i\" \"%{User-Agent}i\"" vhost_combined

LogFormat "%h %l %u %t \"%r\" %>s %O \"%{Referer}i\" \"%{User-Agent}i\"" combined

LogFormat "%h %l %u %t \"%r\" %>s %O" common

LogFormat "%{Referer}i -> %U" referer

LogFormat "%{User-agent}i" agent

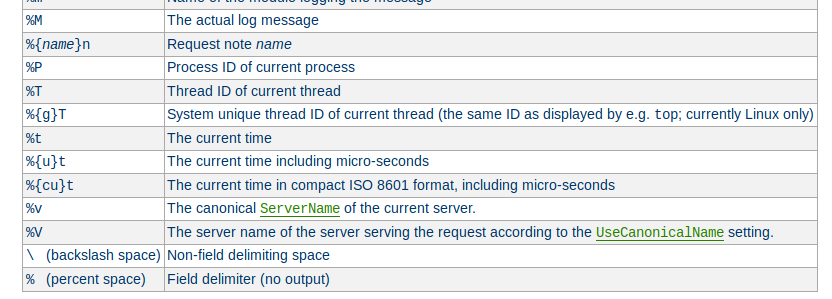

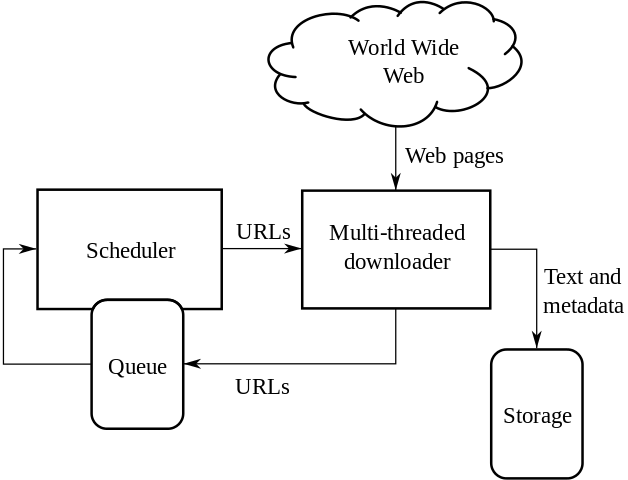

from urllib import request

response = request.urlopen("https://en.wikipedia.org/wiki/Main_Page")

html = response.read()

from urllib import request

from lxml import html

document = html.parse(request.urlopen("https://en.wikipedia.org/wiki/Main_Page"))

for link in document.iter("a"):

if(link.get("href") is not None):

print(link.base_url+link.get("href"))

import requests

url = "https://api.github.com/users/johnsamuelwrites"

response = requests.get(url)

print(response.json())

import requests

url = "https://api.github.com/users/johnsamuelwrites/repos"

response = requests.get(url)

print(response.json())

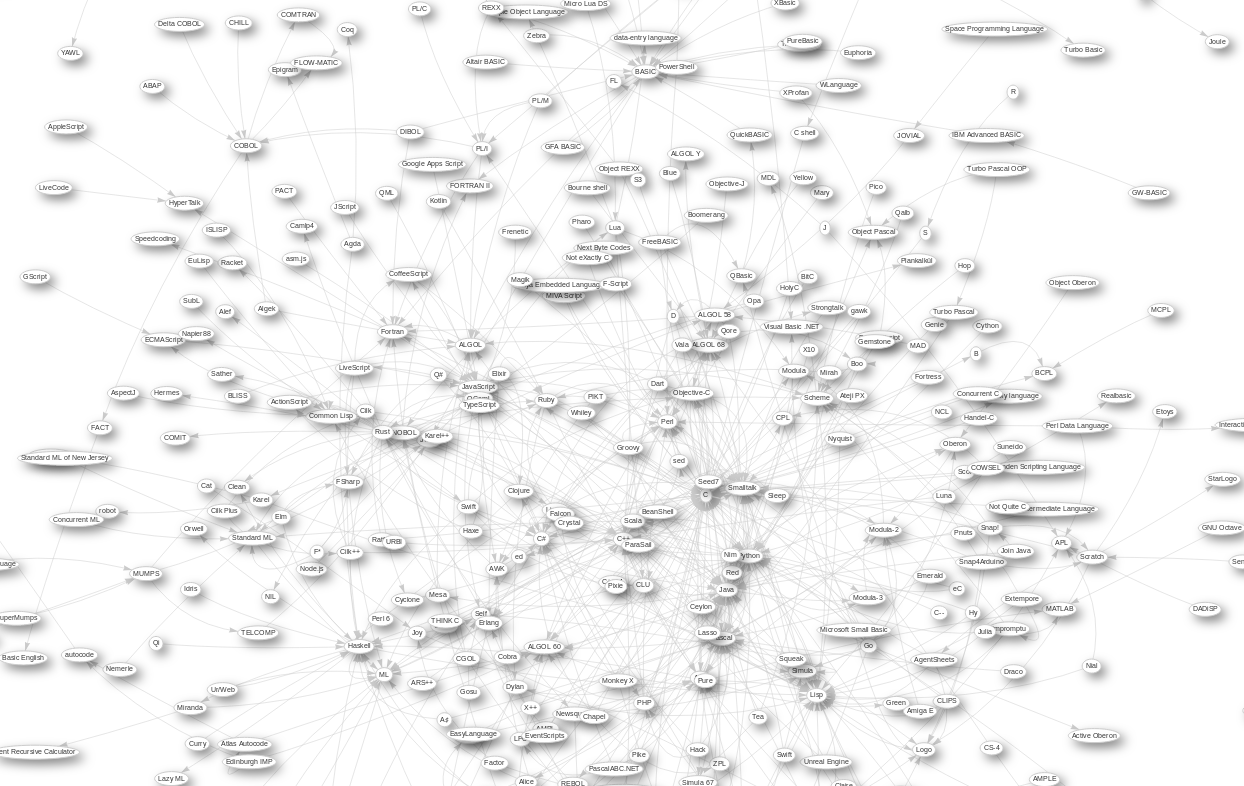

from SPARQLWrapper import SPARQLWrapper, JSON

sparql = SPARQLWrapper("http://query.wikidata.org/sparql")

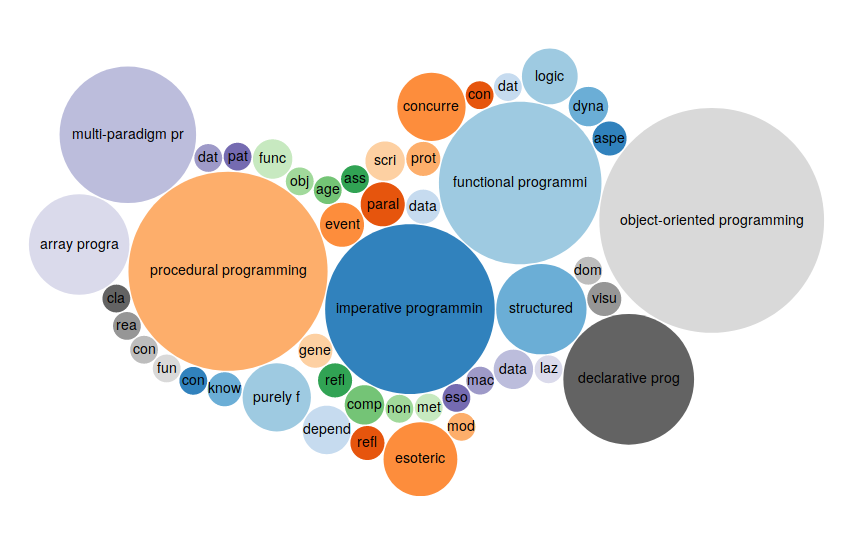

sparql.setQuery("""

SELECT ?item WHERE {

?item wdt:P31 wd:Q9143;

}

LIMIT 10

""")

sparql.setReturnFormat(JSON)

results = sparql.query().convert()

for result in results["results"]["bindings"]:

print(result)

- Wikidata.png)

.png)

La gestion des connaissances clients

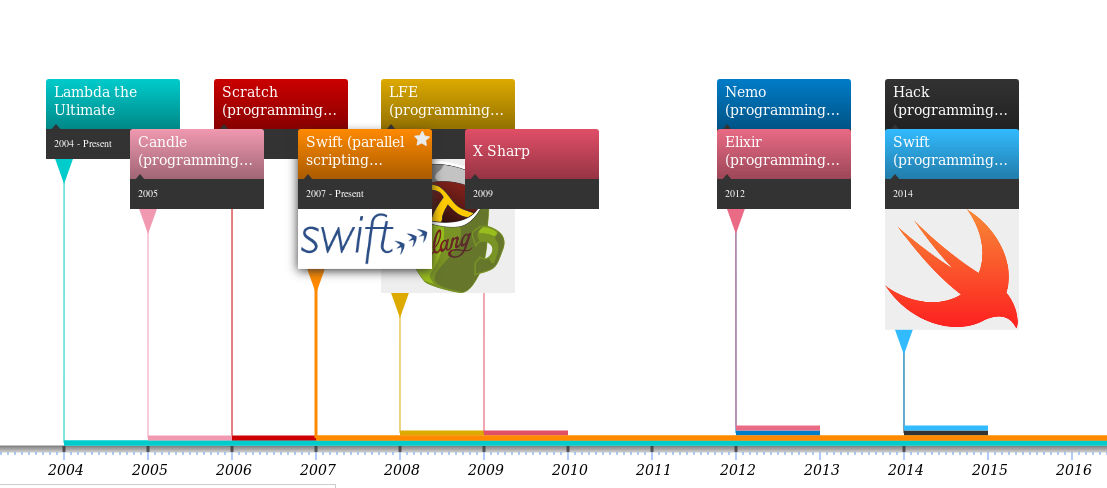

$ head /home/john/Downloads/query.csv

itemLabel,year

Amiga E,1993

Embarcadero Delphi,1995

Sather,1990

Microsoft Small Basic,2008

Squeak,1996

AutoIt,1999

Eiffel,1985

Eiffel,1986

Kent Recursive Calculator,1981

$ export HADOOP_HOME="..."

$ ./hive

hive> set hive.metastore.warehouse.dir=${env:HOME}/hive/warehouse;

$./hive

hive> set hive.metastore.warehouse.dir=${env:HOME}/hive/warehouse;

hive> create database mydb;

hive> use mydb;

$./hive

hive> use mydb;

hive> CREATE TABLE IF NOT EXISTS

proglang (name String, year int)

COMMENT "Programming Languages"

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

LINES TERMINATED BY '\n'

STORED AS TEXTFILE;

hive> LOAD DATA LOCAL INPATH '/home/john/Downloads/query.csv'

OVERWRITE INTO TABLE proglang;

$./hive

hive> SELECT * from proglang;

hive> SELECT * from proglang where year > 1980;

$./hive

hive> DELETE from proglang where year=1980;

FAILED: SemanticException [Error 10294]: Attempt to do update

or delete using transaction manager that does not support these operations.

$./hive

hive> set hive.txn.manager=org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;

hive> DELETE from proglang where year=1980;

FAILED: RuntimeException [Error 10264]: To use

DbTxnManager you must set hive.support.concurrency=true

hive> set hive.support.concurrency=true;

hive> DELETE from proglang where year=1980;

FAILED: SemanticException [Error 10297]: Attempt to do update

or delete on table mydb.proglang that is not transactional

hive> ALTER TABLE proglang set TBLPROPERTIES ('transactional'='true') ;

FAILED: Execution Error, return code 1 from i

org.apache.hadoop.hive.ql.exec.DDLTask. Unable to alter table.

The table must be stored using an ACID compliant format

(such as ORC): mydb.proglang

$./hive

hive> use mydb;

hive> CREATE TABLE IF NOT EXISTS

proglangorc (name String, year int)

COMMENT "Programming Languages"

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

LINES TERMINATED BY '\n'

STORED AS ORC;

hive> LOAD DATA LOCAL INPATH '/home/john/Downloads/query.csv'

OVERWRITE INTO TABLE proglangorc;

FAILED: SemanticException Unable to load data to destination table.

Error: The file that you are trying to load does not match

the file format of the destination table.

$./hive

hive> insert overwrite table proglangorc select * from proglang;

hive> DELETE from proglangorc where year=1980;

FAILED: SemanticException [Error 10297]: Attempt to do update

or delete on table mydb.proglangorc that is not transactional

hive> ALTER TABLE proglangorc set TBLPROPERTIES ('transactional'='true') ;

hive> DELETE from proglangorc where year=1980;

hive> SELECT count(*) from proglangorc;

hive> SELECT count(*) from proglangorc where year=1980;

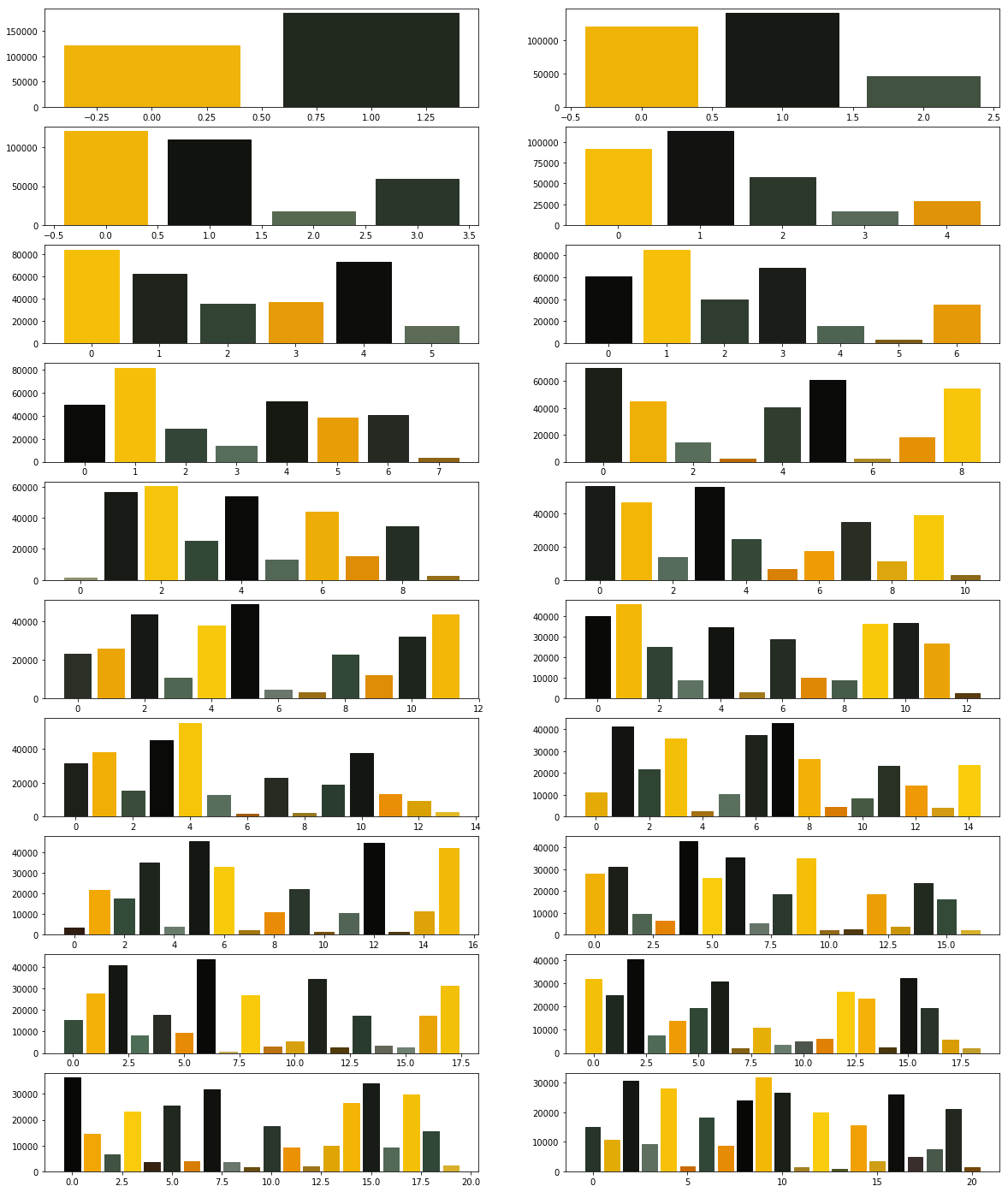

$./pyspark

>>> lines = sc.textFile("/home/john/Downloads/query.csv")

>>> lineLengths = lines.map(lambda s: len(s))

>>> totalLength = lineLengths.reduce(lambda a, b: a + b)

>>> print(totalLength)

$./pyspark

>>> lines = sc.textFile("/home/john/Downloads/query.csv")

>>> lineWordCount = lines.map(lambda s: len(s.split()))

>>> totalWords = lineWordCount.reduce(lambda a, b: a + b)

>>> print(totalWords)

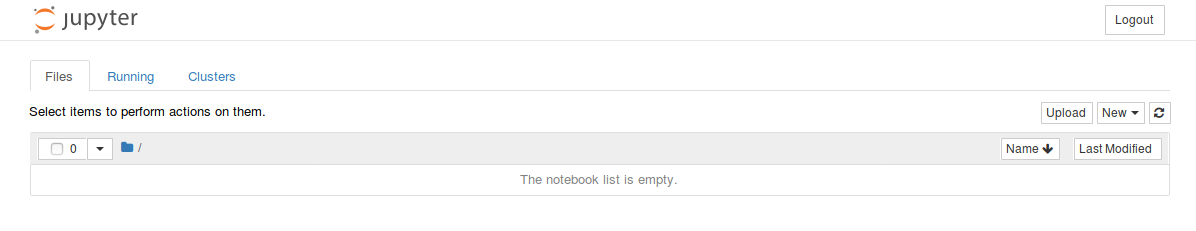

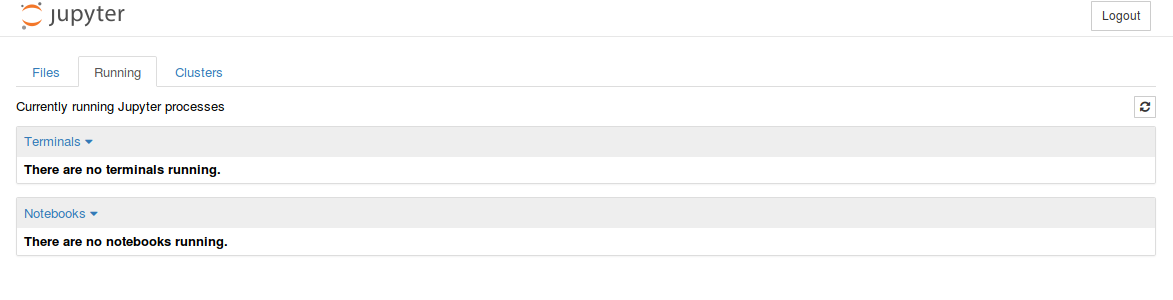

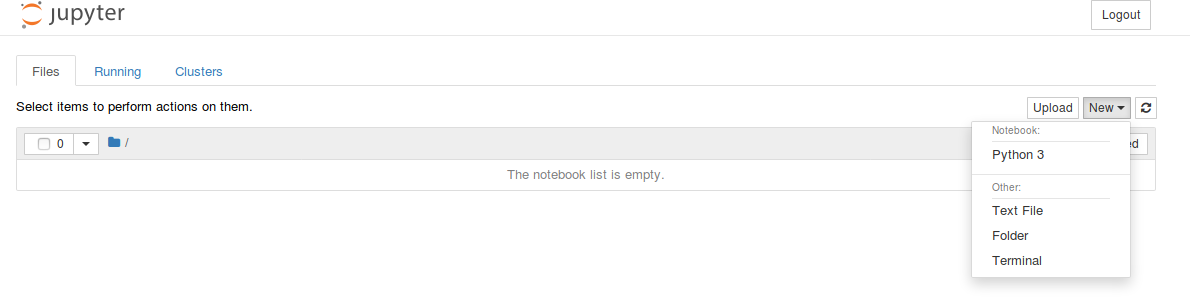

$ export SPARK_HOME='.../spark/spark-x.x.x-bin-hadoopx.x/bin

$ export PYSPARK_PYTHON=/usr/bin/python3

$ export PYSPARK_DRIVER_PYTHON=jupyter

$ export PYSPARK_DRIVER_PYTHON_OPTS='notebook'

$ ./pyspark

from pyspark.sql import HiveContext

sqlContext = HiveContext(sc)

sqlContext.sql("use default")

sqlContext.sql("show tables").show()

+--------+---------+-----------+

|database|tableName|isTemporary|

+--------+---------+-----------+

| default| proglang| false|

| default|proglang2| false|

+--------+---------+-----------+

result = sqlContext.sql("SELECT count(*) FROM proglang ")

result.show()

+--------+

|count(1)|

+--------+

| 611|

+--------+

print(type(result))

<class 'pyspark.sql.dataframe.DataFrame'>

import pandas as pd

result = sqlContext.sql("SELECT count(*) as count FROM proglang ")

resultFrame = result.toPandas()

print(resultFrame)

|count|

+-----+

| 611|

import pandas as pd

result = sqlContext.sql("SELECT * FROM proglang ")

resultFrame = result.toPandas()

groups = resultFrame.groupby('year').count()

print(groups)

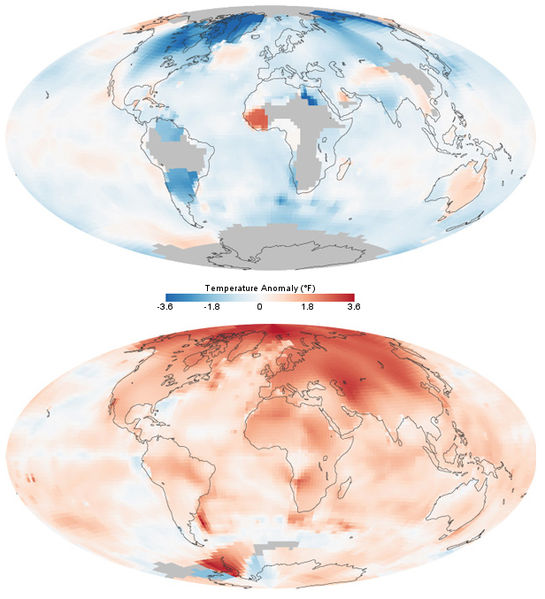

import nltk

nltk.download('vader_lexicon')

from nltk.sentiment.vader import SentimentIntensityAnalyzer

sia = SentimentIntensityAnalyzer()

sentiment = sia.polarity_scores("this movie is good")

print(sentiment)

sentiment = sia.polarity_scores("this movie is not very good")

print(sentiment)

sentiment = sia.polarity_scores("this movie is bad")

print(sentiment)

{'neg': 0.0, 'neu': 0.508, 'pos': 0.492, 'compound': 0.4404}

{'neg': 0.344, 'neu': 0.656, 'pos': 0.0, 'compound': -0.3865}

{'neg': 0.538, 'neu': 0.462, 'pos': 0.0, 'compound': -0.5423}

.jpg)

.png)